How to move forward faster with AI

And how intelligent is your AI really?

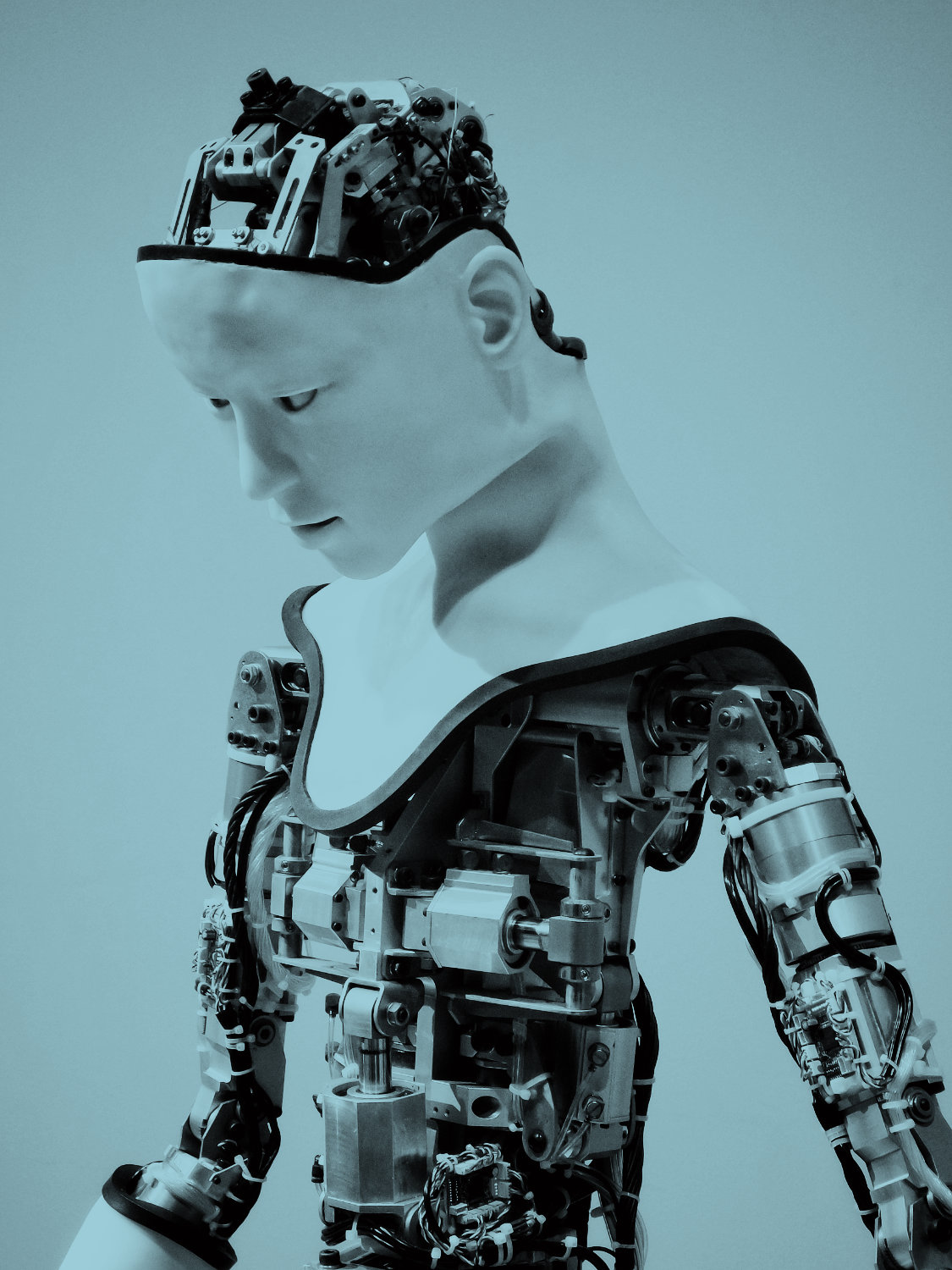

There’s hardly anything as exciting as talking about AI. We all can’t wait for a world where we interact with robots, get picked up by flying helicopters and have jobs that only consist of being creative and doing the things we love to do, leaving the tedious tasks to machines that fill in the gaps and do our admin (no more timesheets please!!). A world where we all move forward faster thanks to AI!

At Brightful we’re truly excited about the possibilities of AI. AI can transform, and already is transforming, the way we analyse, diagnose and research medical issues and solutions. AI helps banks meet AML (anti-money laundering) requirements, helps insurers manage customer lifecycles and offer better customised pricing, helps farmers manage their farmland and animals more efficiently and sustainably, and can help lawyers update documents when new legislation comes into force.

In short: we believe that AI can be a real force for good and is starting to transform industries as we know them. Ethical and Responsible AI is key if AI is to be sustainable rather than damaging. Like any powerful tool, AI has the potential to be used for bad, which is why we strongly support Sir Tim Berners-Lee’s ‘For the Web’ initiative to create a new contract for the web.

Before you dive into the deep end with AI, it’s good to understand some of the issues and challenges you may face when setting out into the thrilling world of AI projects.

The theory varies slightly depending on the school of thought, but broadly speaking there are four different types of AI. It’s important to understand them because they vary wildly: when people talk about governing AI, or about intelligence in general, what they mean depends on which type of AI they have in mind.

AI in general is often defined as algorithms combined with data. How smart your AI is depends on how sophisticated your algorithms are and how much data they can draw upon, but also on how much freedom and flexibility the algorithms have to draw their own conclusions, and on their capability to act on those conclusions.

So, in terms of types of AI, there are currently four:

1. Reactive AI
2. Limited memory AI
3. Theory of mind AI
4. Self-aware AI

What is the key differentiator between those types?

Reactive AI is all about the here and now. Think of IBM’s Deep Blue chess computer, which responds to the information right in front of it. This type of AI doesn’t care about what has happened in the past, or about how the other player feels or might react. It simply calculates through potential moves and picks the best one. Some argue that this is the only type of AI we should get involved in, and you might see why in a minute.
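Deep Blue’s real search was far more elaborate, but the idea of “calculating through potential moves and picking the best one” can be sketched with a tiny minimax search. The game here is a hypothetical stand-in for chess: players take turns removing 1 to 3 stones from a pile, and whoever takes the last stone wins. The function names are our own; note that the agent keeps no memory of earlier moves and looks only at the pile in front of it.

```python
def minimax(stones: int, maximising: bool) -> int:
    """Score a position from the maximising player's point of view:
    +1 if they can force a win, -1 if they lose against best play."""
    if stones == 0:
        # No stones left: the player who just moved took the last one and won.
        return -1 if maximising else 1
    scores = [minimax(stones - take, not maximising)
              for take in (1, 2, 3) if take <= stones]
    return max(scores) if maximising else min(scores)


def best_move(stones: int) -> int:
    """Reactive choice: look only at the current pile, try every legal move,
    and pick the one with the best outcome assuming the opponent plays perfectly."""
    legal = [take for take in (1, 2, 3) if take <= stones]
    return max(legal, key=lambda take: minimax(stones - take, maximising=False))


print(best_move(5))  # 1: leaves the opponent facing 4 stones, a losing position
```

Plain recursion is fine for small piles; a real chess engine adds pruning, evaluation functions and much more.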

Limited memory AI can draw upon some memory and limited observations. This type of AI is used in driverless cars, for example: the AI adds its own observations of the world to its pre-programmed representations. However, it is still limited and doesn’t respond to emotional cues from other beings, so someone with 20 years of driving experience will most likely still be a much better driver than AI (we hope). At the moment we are not yet able to create AI that builds its own full representations, but it’s something that is being explored.

Representations are internal models of the environment that can provide guidance to a behaving agent, even in the absence of sensory information. MIT Press Journals
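To make “adding observations to pre-programmed representations” a little more concrete, here is a deliberately simplified, hypothetical agent. The class name, the speed-limit map, the 20 m threshold and the memory size are all invented for illustration; real driverless cars work very differently. The point is the shape: a fixed built-in representation plus a short memory that forgets old observations.

```python
from collections import deque


class LimitedMemoryDriver:
    """Hypothetical toy agent: a fixed, pre-programmed representation
    (speed limits per road) plus a short, bounded memory of observations."""

    def __init__(self, speed_limits: dict[str, int], memory_size: int = 3):
        self.speed_limits = speed_limits         # pre-programmed representation
        self.recent = deque(maxlen=memory_size)  # oldest observations drop off

    def observe(self, obstacle_distance_m: float) -> None:
        """Store a new observation; only the last `memory_size` are kept."""
        self.recent.append(obstacle_distance_m)

    def target_speed(self, road: str) -> int:
        """Combine the built-in limit with what was recently observed."""
        limit = self.speed_limits[road]
        # Assumed rule: slow down if any remembered obstacle was closer than 20 m.
        if any(distance < 20 for distance in self.recent):
            return min(limit, 10)
        return limit


driver = LimitedMemoryDriver({"high_street": 50}, memory_size=2)
driver.observe(15)
print(driver.target_speed("high_street"))  # 10: a close obstacle is still in memory
```

Once two further, distant observations arrive, the close obstacle falls out of the two-item memory and the agent returns to the road’s limit.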

Theory of mind AI doesn’t exist yet, but the best way to describe it is as AI that understands other people’s worlds. It doesn’t just rely on its own representations but also understands the emotions of others and responds to them accordingly. Droids such as BB-8 and R2-D2 come to mind, for instance R2-D2 communicating with Luke Skywalker and responding to his feelings.

Last but not least, there’s fully self-aware AI.

Conscious beings are aware of themselves, know about their internal states, and are able to predict feelings of others. ‘Understanding the four types of artificial intelligence’

This type of AI, of course, raises some interesting ethical and moral challenges. For instance, AI currently cannot own any IP or patents, even if it’s the algorithm that came up with a novel solution. However, as AI progresses and changes, we may have to revisit how we perceive it.

So is artificial intelligence really intelligent? And what constitutes intelligence? Is it the ability to be creative? Is it self-awareness and conscious decision-making? We are still in the early stages of exploring these answers, but in the meantime it’s crucial to focus on three key areas: data, people, and ethics.

The data challenge is vast and comes in many disguises, from who owns the data, to its quality, to the bias it may contain.

We often find that tech companies own or create the sexy algorithms that can do amazing things when fed the right data, and enough of it. This is where large corporates and other businesses and organisations that own the data come into play. It could be banking, financial, insurance or medical data. Setting aside the complex legal side of permission-based processing for different purposes, you can quickly see how some of these data sets may be a) huge, b) outdated or c) unstructured. Imagine you taught a toddler that a spider is called a ‘house’ and a house is called a ‘spider’. This very simple example shows the data challenge you may have on your hands when working on an AI project: false entries and false labels can wreak havoc. No wonder, then, that 8 out of 10 projects stall, not necessarily because of coding or algorithm issues, but because of data issues.
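The toddler example translates directly into code. Below is a minimal, hypothetical sketch using a 1-nearest-neighbour classifier (our choice for illustration, not a method from any specific project) with two invented features: number of legs, and whether the thing has a roof. The algorithm is identical in both runs; only the labels in the training data differ.

```python
import math


def nearest_label(examples, query):
    """1-nearest-neighbour: return the label of the closest training example."""
    closest = min(examples, key=lambda example: math.dist(example[0], query))
    return closest[1]


# Hypothetical features: (number of legs, has a roof: 1 or 0)
clean = [((8, 0), "spider"), ((0, 1), "house")]
swapped = [((8, 0), "house"), ((0, 1), "spider")]  # the toddler's mixed-up labels

query = (7, 0)  # a spider that has lost a leg
print(nearest_label(clean, query))    # spider
print(nearest_label(swapped, query))  # house
```

The code is not “wrong” in the swapped case: it faithfully learns whatever the labels say, which is exactly why bad labels are so damaging.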

Another thing to consider is bias in data and algorithms. Many people believe that algorithms are unbiased and more objective than humans.

But as author and data scientist Cathy O’Neil puts it:

Algorithms make things work for the builder of the algorithms. We have to question algorithms. Especially if they’re important to us.

Algorithms are essentially looking for trends and patterns, and some of the conclusions they draw could make us all feel VERY uncomfortable. This is why initiatives such as the RSA’s Citizens’ Jury are so interesting. Whilst we may enjoy personalised shopping and holiday suggestions from AI, opinions turn very quickly once AI is deployed to make more serious decisions, such as whether your job application succeeds or whether you end up in prison.
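Here is a deliberately naive, hypothetical illustration of how a pattern-finding algorithm can turn past bias into future decisions. The groups, the historical outcomes and the 0.5 threshold are all invented; real hiring models are far more complex, but the same mechanism can apply whenever historical decisions are used as training data.

```python
from collections import defaultdict


def learn_hire_rates(history):
    """Learn the historical hire rate per group: a naive 'pattern' in the data."""
    hired = defaultdict(int)
    total = defaultdict(int)
    for group, was_hired in history:
        total[group] += 1
        hired[group] += int(was_hired)
    return {group: hired[group] / total[group] for group in total}


def recommend(rates, group, threshold=0.5):
    """Recommend a candidate purely on their group's past hire rate,
    ignoring anything about the individual."""
    return rates[group] >= threshold


# Hypothetical past decisions: group A was favoured, group B was not.
history = [("A", True)] * 8 + [("A", False)] * 2 + [("B", True)] * 3 + [("B", False)] * 7
rates = learn_hire_rates(history)
print(rates)                  # {'A': 0.8, 'B': 0.3}
print(recommend(rates, "A"))  # True
print(recommend(rates, "B"))  # False
```

Nothing in the code mentions prejudice, yet every future candidate from group B is rejected: the algorithm has simply learned, and now repeats, the pattern in its data.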

But setting the challenges to one side, just imagine what would be possible if we could remove some of the barriers between industries and gain much more insight by sharing data across them. It could benefit society immensely: data owned by insurers could help medical researchers find health triggers (rather than just increasing your health premium), and data owned by energy companies could help governments create smarter and more sustainable cities. These are just a few benefits. For more exciting, real-life examples of AI, make sure to download our AI presentation at the end of this article!

We’ve discussed the different types of AI and the data challenge, but one key aspect that can’t be ignored is the human element. Many AI projects fail because the wrong type of pilot has been chosen, or because there is little AI readiness or understanding of how to embed AI successfully in the organisation.

Some of the key challenges we come across are:

When AI is embedded into an organisation, it’s often driven by the pursuit of efficiency and profit. This makes people fear for their jobs. Competing agendas can also affect the success of an AI project. Many of these potential roadblocks need careful planning, and it’s crucial to have people on your team who are great communicators, not just data scientists, because for AI to be successful it needs to work for people. A good understanding of human nature and internal processes, and keeping people informed, will go a long way towards getting your project off to a better start.

Before starting any AI project, it’s important to work out how your business or organisation will benefit from using AI. To avoid wasting time and energy on a ‘me-too’ innovation project, we strongly recommend starting with a one-day ‘Problem framing workshop’, which aligns senior stakeholders and sets the strategy and priorities from the outset.

We’ve briefly touched on some of the ethical challenges around AI when talking about bias in data and algorithms. And to be honest, we’ve only scratched the surface of the governance and ethical issues you might come across when using AI. The questions abound: who should be responsible for governing and policing AI? How can the industry be more transparent and responsible about how AI is used?

We are delighted to see a growing trend of industry leaders speaking out on the subject, such as Sir Tim Berners-Lee’s latest Web Foundation campaign for a Global Contract for the Web, and Google’s ‘Explainable AI’, which is developing frameworks to make AI more ethical, responsible and transparent. We are pleased the industry is moving towards a more mature conversation about what Responsible AI looks like.

Here at Brightful we are very passionate about Responsible AI, and it’s our mission to make people aware of what AI can achieve. We fully support transparency in AI and its deployment for good causes. We see AI as a force for good, and we are mindful of its consequences. If you feel the same way and want to stay informed about the latest developments in Responsible AI, make sure to sign up to our newsletter, where we’ll continue to share our views and ideas on ‘Responsible AI’.